Watson Assistant: Improve Assistant Performance

The ask: Allow the Tanya persona, a non-technical content owner of an assistant (chatbot), to understand and improve the performance of her assistant.

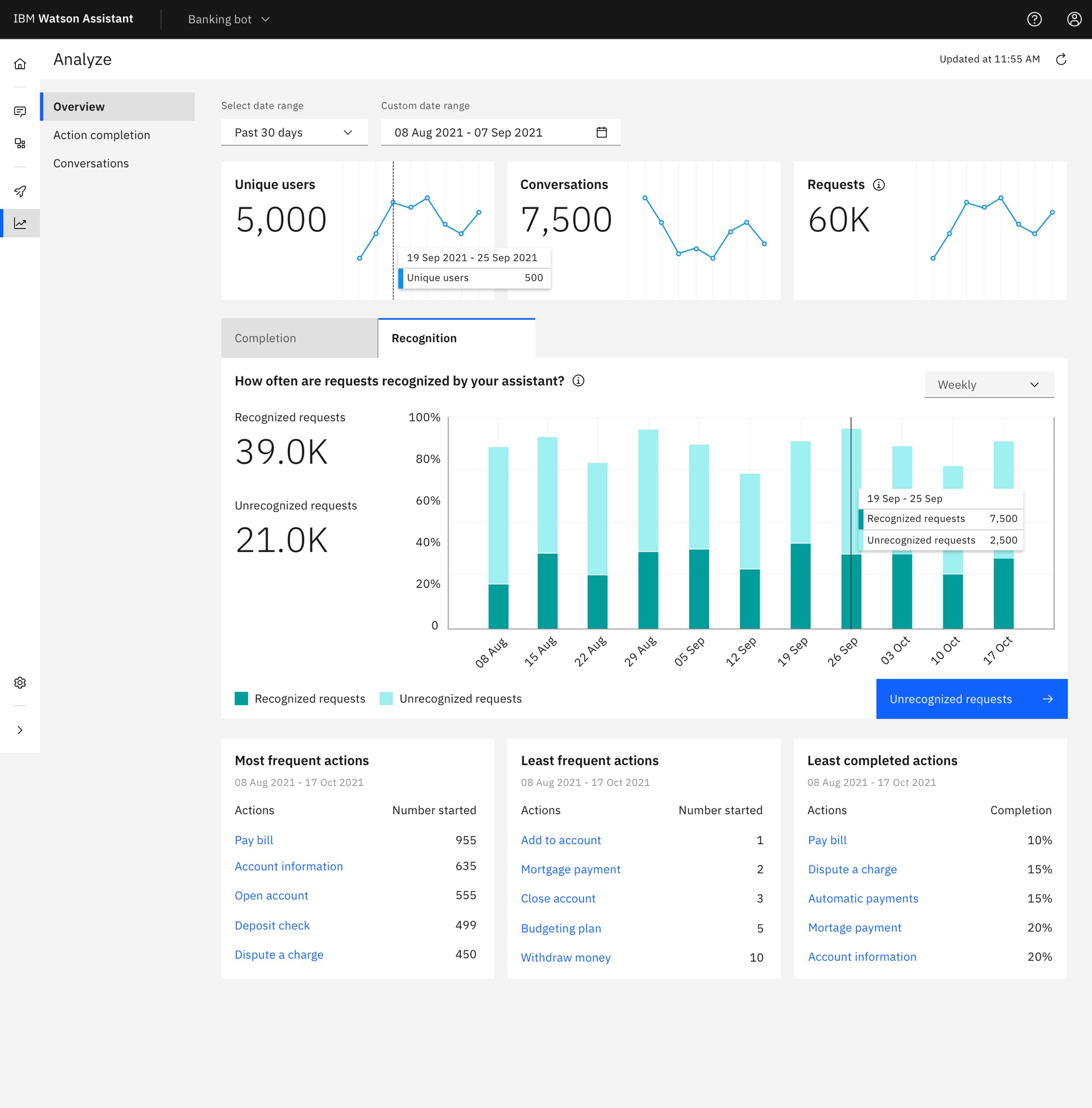

We needed to create an analytics experience for a new, easier way of building assistants. This new way of building let us incorporate smarter tools that give Tanya insight into three things: how well her assistant recognizes customer requests; once a request starts an action (conversational flow), whether customers are able to complete that action; and, if they don't complete it, when and how it failed.

The team: Design lead (me), Design Apprentice (my mentee), Product Manager, Engineering Lead, 2 Engineers

The timeline: 6 months

The process: Stakeholder Management, Design Thinking Facilitation, Sketching, Wireframing, Iterative Prototyping.

The outcome: Released; impact was not measured before I left IBM.

I was the design lead for this project, my first on Watson Assistant. Initially, I spent much of my time meeting with stakeholders and my Product Manager counterpart to understand how Watson Assistant worked and what they needed from an analytics platform. It was also my first attempt at building an analytics tool.

I facilitated a design thinking workshop to get team alignment on what we were trying to build, then used rapid iteration techniques to visualize our ideas and refine them. We were unable to test with users, so we relied on our collective knowledge to develop an MVP that captured our assumptions, with the hope of validating and refining it with users in the future.

I lost some files that showed more of my process and synthesis of our conversations. I will do my best to fill in the gaps with explanations along the way.

Workshop and Team Alignment

I led a 3-in-a-box workshop (Design, Development, Product Manager) to align on the best analytics experience we could provide Tanya (a non-technical content owner for an assistant). We synthesized the workshop into a to-be scenario: a high-level picture of what we wanted the future experience to be. It was based on previous research, client meetings, and our team's assumptions. That's alright; that's why we test. It gave us a foundation to build upon.

We decided as a team to provide Tanya with two key metrics to start. These metrics were heavily influenced by the technology engineering had developed before I joined the team. They started out as containment and coverage and were eventually changed to recognition and completion. The first measured how well her assistant was recognizing customer requests (user messages). The second applied to recognized requests: were customers able to complete the conversation (quality of conversation), and if not, what was the reason? If a request was unrecognized, we wanted to give Tanya insight into what to build next (breadth of conversation).

Initial sketches

I then took the journey map and the information I had gathered from engineering and product management and started to piece together my understanding of the direction through sketching. The sketches were also helpful in ad-hoc meetings to make sure I was on the same page as my team before moving to higher fidelity. They let us make early and often and facilitate discussions as a 3-in-a-box team.

Mid-fidelity Prototype

I then created a mid-fidelity prototype (Sketch + InVision) based on feedback from Offering Management and Development. The purpose of this prototype was to test with users representative of our Tanya persona. Does this fit their needs? What gaps are there in the experience? Is it usable? Sadly, testing was de-prioritized for this release because of the development cycle, so the prototype turned into a handoff for development to start working from. I had to rely on feedback from my team and lean on the broader design team for critique.

However, even without testing with users, we were able to work out some of the details. I no longer have the many cycles of iteration that were done here. We made significant changes to the action completion flow, figuring out what content we wanted to show and how. We figured out how to show aggregate completion for an action, how users could dig deeper to see specific instances of a complete or incomplete action and why, and how they could dig deeper still to view the full conversation for further troubleshooting.

Prototype: https://ibm.invisionapp.com/console/share/D3O1XF6KXNZ/971654452

Scoped MVP for release

The design had to be scoped down for release, so I worked with the Offering Manager and Development team to cut it into three phases. This is the minimum viable product we agreed upon for release; phases 2 and 3 would be implemented later. It also reflects changes based on feedback from my immediate team and the broader design team.

Prototype: https://ibm.invisionapp.com/share/Y4O1DQ0N5EW#/320045723_Analytics_Overview_Concept_5_Copy_30

Fast Follow: Annotations

The last piece of design work I was responsible for on this team (a fast follow after release) was to provide in-line annotations that help Tanya understand where a conversation broke down and why. This is the deepest level of analysis for Tanya. At this point she has chosen to improve the completion rate of a specific action (topic of conversation) and wants to understand why each conversation was or wasn't completed.

Apologies for the pixelated image. This is all I have access to.